DeepScribe Outperforms GPT-4 by 59% on AI Medical Scribing: A Benchmark Study

DeepScribe AI, trained on domain-specific data from millions of clinical encounters, significantly outperforms GPT-4.

To download and read the complete white paper, click here.

Abstract

This benchmark case study aimed to assess the performance of OpenAI’s GPT-4 in a real-world medical environment and compare it to DeepScribe’s specialized documentation engine, which uses GPT-4 alongside domain-specific models and data. The study analyzes deidentified patient-doctor conversations with challenging input characteristics such as noise pollution, long transcripts, and complex reasoning, and focuses on the critical defect rate of both solutions. Results revealed that DeepScribe was 59% more clinically accurate than GPT-4. Examples in this study highlighted how DeepScribe excelled in capturing crucial domain-specific details for healthcare acceptance, while GPT-4 exhibited shortcomings due to a lack of medical data training.

Introduction

It’s an understatement to say that OpenAI’s ChatGPT is the biggest breakthrough in NLP, perhaps AI in general. In just a few short months, it has brought AI to the forefront of our national discourse and reshaped how global leaders are thinking about the future of work, economics, education, and much more. At DeepScribe, access to GPT-4 (OpenAI’s latest large language model) has opened new doors. With robust prompt engineering focused on making GPT-4 behave and reason like a medical scribe, we’ve been able to implement it alongside our existing models to catapult us towards a fully-automated solution. Over recent months, however, it’s become apparent that not all competitors are taking this tactical approach, and are instead leaning on GPT-4 as the primary model in their scribing solutions.This adoption raises a host of questions about accuracy, security, and safe deployment. We know GPT-4 is the most powerful LLM available, but the principal question we’re asking is: How does GPT-4 fare in healthcare without access to domain-specific data?Before we dive into the methodology of how we answered this question, let’s first consider why automating medical documentation is such a difficult problem to solve.

Hard Input

Hard input refers to input data that is difficult for an AI system to process or interpret. In AI documentation, there are three elements of hard input that complicate the process: Noise Pollution, Long Transcripts, and Complex Reasoning.

Noise Pollution

Even with the best automatic speech recognition (ASR) technology, crosstalk, noisy environments, enunciation, and faulty acoustic capture can be difficult for AI to interpret. There are hundreds of edge cases: interrupted patient answers, multiple historians, dosages of undiscussed medications, and much more.

Long Transcripts

AI performs well on short patient encounters. Long, meandering patient visits filled with medical nuances make it difficult for an AI to deem what is relevant and irrelevant.

Complex Reasoning

Patient encounters involve intricate medical conditions, multiple comorbidities, and a wide range of symptoms and treatments. This complexity poses a significant challenge for AI systems attempting to automate medical documentation. Should the AI include the context behind why the patient took Aleve or should it leave it out? Should it associate beta blockers with hypertension or anxiety?

Low Error Tolerance

Errors can have profound negative effects on patients, clinicians, and entire practices.

Disjointed Concepts

With naturally disjointed conversations, AI systems are left with disjointed, non-continuous data. These systems must then take this disjointed data, with disjointed concepts and use it to paint a picture that is highly accurate.

Methodology

A central focus of this benchmark study was to step outside of the demo environment and measure AI performance “in the wild.” As such, we gathered a sample of deidentified patient transcripts captured on the DeepScribe platform — those rife with the hard input challenges mentioned above — and wrote standardized notes using rubrics that we’ve calibrated through feedback from thousands of clinicians. These standardized notes were then audited and reviewed by a panel of analysts to ensure they met accuracy standards. These notes became the ground truth for this study and the benchmark for which we compared the outputs of DeepScribe and GPT-4.

For added context, DeepScribe’s note-generation stack consists of fine-tuned large language models and several task-specific models that are trained on millions of labeled patient conversations. Recently, we have incorporated GPT-4 into this stack to do what it does best: manipulate language and extract information. We leverage all of these models to achieve the global maxima.

The GPT-4 instance in this benchmark study consists of the same GPT-4 prompts we use in our stack, but run in isolation instead of alongside our models. We consider it sufficiently extensive in its exploitation of SOTA techniques to achieve the best possible GPT-4 performance on this task. This includes heavy prompt-engineering to dissect the note into its constituent concepts, specificity in information extraction, reflection, example-based inference, and prompt chaining among other common techniques.

Note outputs from both platforms were anonymized and sent through the same grading process where defects were categorized, labeled, and then compared against the standardized note.

While both models showed tendencies to produce minor errors related to grammar and duplications, this test focused primarily on the prevalence of “critical defects” such as AI hallucinations, missing information, incorrect information, and contradictions. By filtering out minor errors that do not compromise the clinical integrity of a medical note, we were able to get a more distilled view of the clinical viability of both AI solutions.

Results

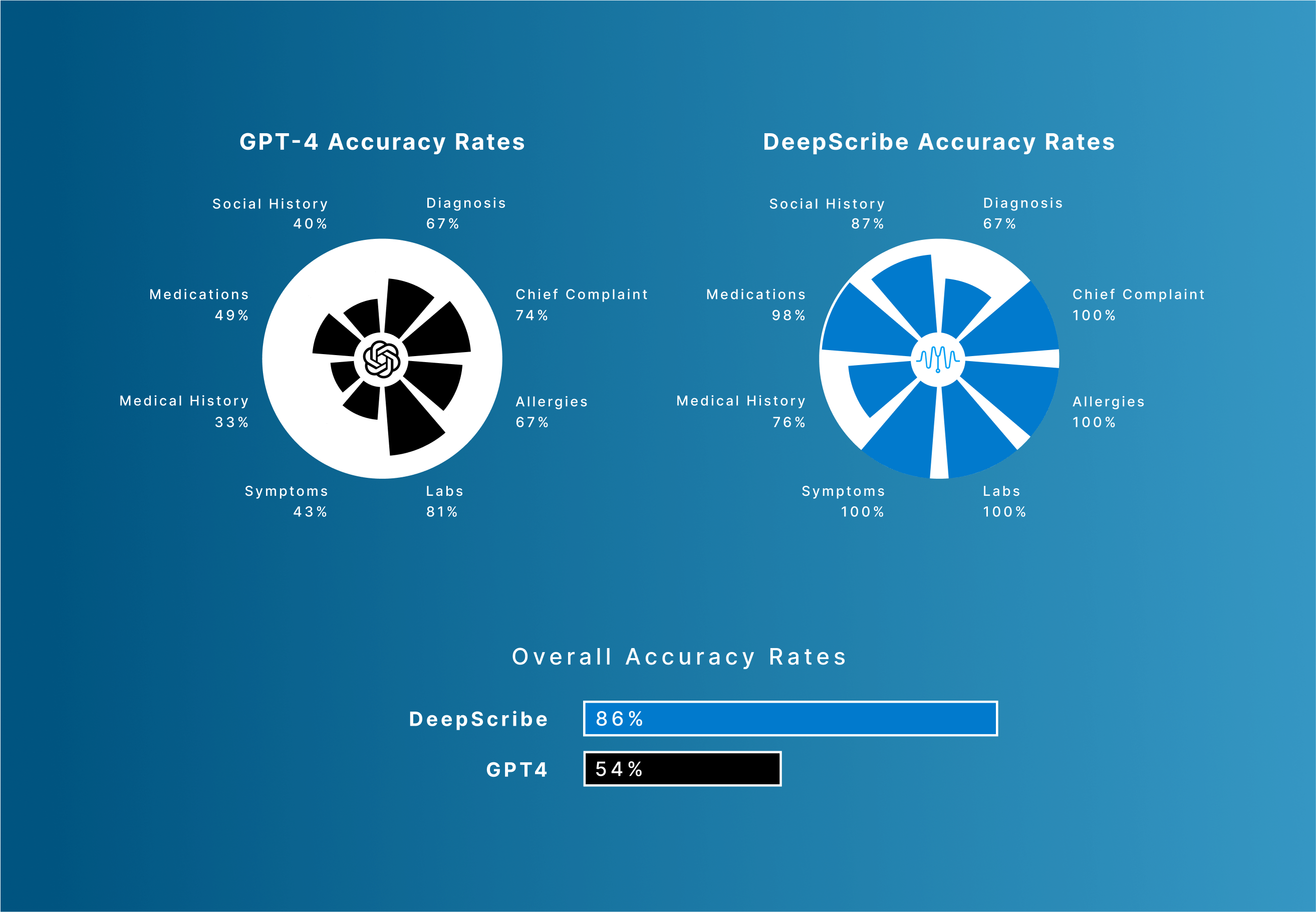

DeepScribe is 59% more accurate than GPT-4 when it comes to the clinical accuracy of AI-generated medical notes.

Among critical note categories, here's how the two solutions performed when compared to accuracy and acceptability standards:

Examples

The key difference between GPT-4 and DeepScribe is training and tuning on domain-specific data, which is apparent in the examples below. GPT-4, to its credit, successfully captures high-level topics, but does not include the supporting details that are vital to healthcare acceptance.

Example 1:

DeepScribe’s note successfully documents the urine test, the results (“3+ level glucose”) and the medical rationale behind the high glucose level (new diabetes medication, Farxiga). While GPT-4 does successfully identify the new diabetes medication and hearing test, it misses the other relevant information.

Example 2:

GPT-4’s output does not recognize the patient’s lack of heart palpitations and their oxygen saturation levels. Additionally, it lacks qualifiers such as “laying down” and its relationship with nighttime wheezing. Finally, changes in bladder function, types of OTC medications, and important context such as “blood while sneezing” versus “bloody nose while sneezing.”

Conclusions

There are a few conclusions that we've gathered from the audit of both solutions.

DeepScribe’s Specialized Solution is the Difference

DeepScribe's AI performed with significantly higher medical accuracy than GPT-4, the reason for which is relatively straight forward:

DeepScribe is trained on millions of real medical encounters that discuss real medical conditions with real medical nuances. GPT-4 is not. It is this vast dataset that allows DeepScribe to arrive at more medically accurate conclusions and perform significantly better than AI-powered scribing tools that use GPT-4 as the primary model.

Without access to the clinical data or specialized models that inform medical conclusions, GPT-4 can’t be expected to consistently deliver highly accurate documentation. GPT-4’s medical note outputs have a tendency to correctly mirror real medical notes (formatting, style, etc.), but we found the medical accuracy of those outputs to be flawed when examined closely.

To download and read the complete white paper, click here.

text

Related Stories

Realize the full potential of Healthcare AI with DeepScribe

Explore how DeepScribe’s customizable ambient AI platform can help you save time, improve patient care, and maximize revenue.